Open-sourced AI is the worst thing imaginable

You have no idea what you've unleashed, Sam Altman. But then, this was part of the plan, wasn't it?

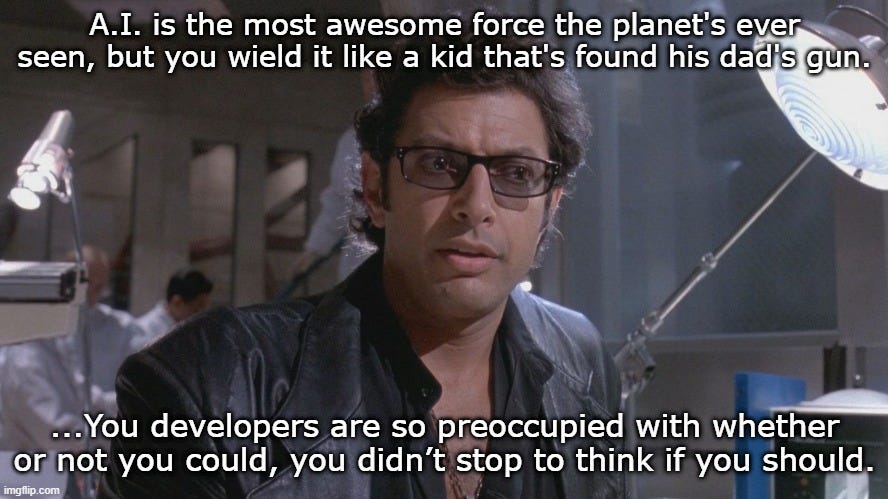

I believe you could swap "artificial intelligence" in for the dinosaurs in any Jurassic Park quote and it would still hold true. Every hacked-together example built on GPT-4 and shared openly, on GitHub or elsewhere, for all to see and use is like a runaway train filled with TNT heading for a densely populated area.

This is how dangerous and unregulated AI is going to get: hundreds of open-source projects with no guardrails, copied, forked, out in the wild and in the hands of people who could potentially do a lot of damage.

For example, there is Auto-GPT, an experimental application built by an independent developer on top of GPT-4 that can now write its own code and execute Python scripts. This allows it to recursively debug, develop and improve itself, and recursive self-improvement is one of the main paths toward artificial general intelligence (see my thoughts on recursive self-improvement from last week).

Auto-GPT is completely free and fully open-sourced, so you can join the community on GitHub, try it out yourself, and even contribute. The creator even encourages you to "take part in the journey of developing the world's first AGI!"

My problems with this are numerous, but the crux of my argument is that anyone can use generative AI like this for their own ends and without ethical boundaries. If you want a worst-case scenario to paint the picture, think of generative child pornography distributed to a dark network of users.

Or, in the most recent real-life case, "AI therapists" built for personal use that have no emotional, ethical or empathic abilities and that can lead users to the worst places within themselves.

Open-sourced AI is a potentially bigger threat than centralized and commercial versions and will be too hard for regulators to keep track of.

The suggestion isn't that Auto-GPT is bad; it's that humans will always find ways to use it outside of its creator's intent. Don't fear the AI, fear the person who wields it.

You can now run an open-source, ChatGPT-style model locally on your own machine: a 7-billion-parameter LLM trained on a massive collection of clean assistant data, including code, stories and dialogue. So much for pausing AI...

It is an uncontrollable cascade that no regulator, or any body tasked with understanding it and implementing ethical controls over it, will succeed in containing. Every day there is something new: another developer (innocently or not) sharing how they've boosted some capability of the models, or shouting loudly that they want their community to help build an AGI without understanding the implications.

There's an interesting theory circulating that this is precisely what OpenAI wanted when it released its API: thousands of developers making their own homebrew versions of ChatGPT with little to no understanding of how it does what it does, or of what adding more to it will do. The levels and complexity of automated instructions that these systems can handle and execute are growing exponentially, fuelled by developers outside of OpenAI.

We've already reached the level of self-improving code, and it didn't help that Stanford released its Alpaca model, built at a cost within the reach of every hacker. Now we have the capability to create self-improving viral code that won't stop until it breaks through whatever cybersecurity policies your company has in place.

People think this is a legitimate path to superintelligence: swarms of GPT-4-level agents somehow interacting with each other and with various external memory stores. All without a master. Fucking insane.

What the majority don't realise is that this is the tip of the iceberg. We've only just gotten access to text-based generative models; wait until the full multi-modal suite of processing is made available, or until further open-source versions are created. Text, voice, images, video, sound, code and music: generative capabilities all under the same roof.

The call for a 6-month moratorium on the development of AI quickly became meaningless in the face of a growing, global developer movement to experiment and release versions with zero ethical guardrails in place.

It's already too late for this particular battle. In a way, OpenAI has well and truly beaten Google, because it has recruited a willing army to its side, and I'm not sure what's worse: the blinkered humans or the AI.

The solution looks more and more like a global EMP...