We're Already Heading For A Dystopian Future With AI

We keep using Black Mirror as an instruction manual...

A fool with a tool is still a fool

I have two immediate thoughts after this week’s whirlwind of announcements and articles about artificial intelligence and generative AI.

(1) Debating whether an algorithm needs rights before the existing inhabitants of this planet have theirs is just techbro bullshit. We continue to kill sentient species for fun and deny human rights to those who need them, but please, do argue why PowerPoint with ChatGPT needs to be protected.

“AIs are no longer just tools. They are quickly becoming digital minds integrated in society as friends and coworkers.” No, they are tools, and you are an even bigger one for thinking otherwise. Confusing consciousness and sentience with artificial intelligence, or even with AGI, which is allegedly now within five years, is a nonsense distraction we do not need right now.

This leads me to my second point which is far more important to debate.

(2) Suppose ChatGPT or a future GPT iteration goes rogue or develops AGI, and OpenAI has no option but to throw the switch out of a misguided belief that they no longer have control.

Thousands of products would be rendered useless overnight, billions of users would be left stranded, confidence in AI would tank, and Microsoft shares would be wiped out. It’s possible.

When Facebook or Google goes down, it kills OAuth and with it the ability to log into websites, password managers, and productivity suites. OpenAI is now integrated into everything, because we rushed to build on their platform for a competitive advantage driven by FOMO. When it goes down, it wipes out the ability to work entirely, especially if developers build ChatGPT interfaces as the main UX.

In a generation or two people will forget that we ever used filters, buttons, switches, drop-downs, and popup windows to be productive with. That little chat window going offline will wreak havoc and users won’t know what to do. It’s like the video of the child trying to swipe on a newspaper and wondering why it’s not a touchscreen.

I’m sure you can deploy your own instances of GPT wherever you want, but the vast majority will take the lazy route, go directly to OpenAI’s service, and rely on it.

That to me is a far bigger danger and issue than conferring rights to a large language model or a robot uprising.

It’s our pattern of reliance for the sake of convenience that society could pay the price for, and AI right now is the pinnacle of that convenience.
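For anyone who builds on a hosted model anyway, the reliance problem above can at least be softened with a fallback chain. What follows is only a rough sketch under stated assumptions, not how any particular product does it: `hosted_api` and `self_hosted_model` are hypothetical stand-ins I made up for illustration (no real SDK is called), and the hosted one is hard-wired to fail so the fallback path is visible.

```python
from typing import Callable, Sequence

# A provider is any function that takes a prompt and returns text.
Provider = Callable[[str], str]


class AllProvidersDown(Exception):
    """Raised when every provider in the chain has failed."""


def ask_with_fallback(prompt: str, providers: Sequence[Provider]) -> str:
    """Try each provider in order and return the first successful answer."""
    errors = []
    for provider in providers:
        try:
            return provider(prompt)
        except Exception as exc:  # any failure moves us on to the next provider
            name = getattr(provider, "__name__", "provider")
            errors.append(f"{name}: {exc}")
    raise AllProvidersDown("; ".join(errors))


def hosted_api(prompt: str) -> str:
    # Hypothetical stand-in for a hosted service; simulates an outage.
    raise ConnectionError("service unavailable")


def self_hosted_model(prompt: str) -> str:
    # Hypothetical stand-in for a model you run yourself.
    return f"[self-hosted] {prompt}"


def search_products(query: str) -> str:
    """Chat-first search that degrades to the old UI instead of dying."""
    try:
        return ask_with_fallback(query, [hosted_api, self_hosted_model])
    except AllProvidersDown:
        # Last resort: point the user back at filters, buttons and menus.
        return "Chat is offline. Use the filters and menus instead."
```

Calling `search_products("red shoes")` here skips the failed hosted call and answers from the self-hosted stand-in. The point is less the code than the design choice: the chat window is one route to the product, not the only one.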

Do you want to live forever?

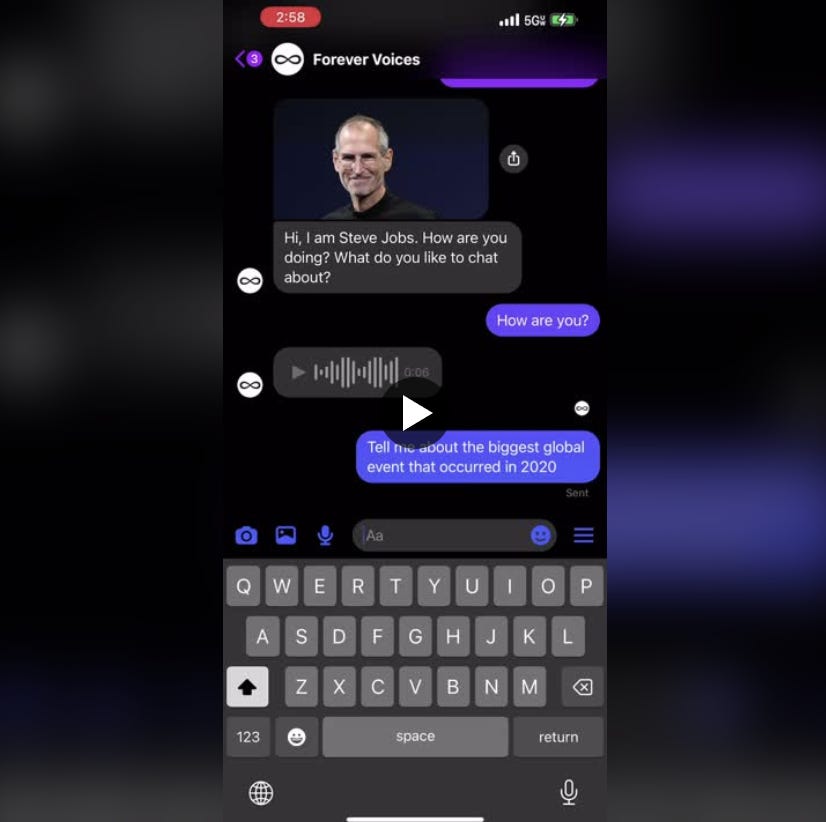

John Meyer has done something I think a lot of us need to start seriously questioning: using ChatGPT and generative AI to bring a dead person, whether a celebrity or a loved one, back to life.

This is pure Black Mirror bullshit and I really don’t like where this is going.

He trained an AI on Steve Jobs’ voice, connected it to the GPT-4 API, and finally hooked it all up to Facebook Messenger to allow two-way voice conversations with Steve Jobs about anything.

Whilst this is a novelty, serious ethical questions are coming thick and fast for anyone who wants to provide some kind of Black Mirror service that lets users talk to departed loved ones, recreated by models trained on those people’s life experiences and the data held across social media sites, emails, photos, videos and more.

This kind of thing is a mental health nightmare that invades the grieving process, but for those who open this door and build these things there’s lots of money to be made, which will no doubt ease their conscience.

Filing this under ‘Do Not Want’.

Cognitive Warfare is coming

A 2020 paper titled “Cognitive Warfare” describes information, and its manipulation to affect decision-making at critical times, as a new battlefront.

“With the increasing role of technology and information overload, individual cognitive abilities will no longer be sufficient to ensure an informed and timely decision-making, leading to the new concept of Cognitive Warfare, which has become a recurring term in military terminology in recent years.”

The military draws plenty of comparisons with China and Russia here, but those threats are potentially the lesser ones, because as of last week we’ve truly entered a phase where generative AI can be a far more effective weapon than social networks alone.

The general availability of tools like ChatGPT, with APIs that can access multiple data domains and multimodal models that can ingest and output pretty much anything in text, image, voice or video form, is going to prove far more dangerous in the hands of smaller, more radical groups than in those of nation states.

“The exploitation of human cognition has become a massive industry. And it is expected that emerging artificial intelligence (AI) tools will soon provide propagandists radically enhanced capabilities to manipulate human minds and change human behaviour.”

The paper makes mention of artificial intelligence but only in a generic sense and it’s going to need updating again in light of recent announcements.

If generating pictures of Trump being arrested is a laugh now, how sophisticated this could get in the next 12 months should worry you.

But what should scare you more, if the congressional hearings with TikTok are anything to go by, is that the inability of those in charge to grasp technology is precisely what someone with harmful intent would be banking on.

Good luck America, you’re going to need it.

The democratization of AI is already dead, thanks to the OpenAI monopoly

The idea of democratizing artificial intelligence died pretty quickly this week thanks to the release of OpenAI’s API for GPT-4. Why?

Because in a matter of weeks, if not days, they are becoming the single largest AI company on the planet, thanks to fever-pitch hype and FOMO from organizations, apps and vendors who feel they must be a part of it.

Everyone who wants a piece of this will be directing their teams to develop an integration because if they don’t then a competitor will.

Can you imagine the amount of data they’ll now be privy to for additional training? I’m sure the terms and conditions will waffle some handwavy bull about data privacy, but we all know that’s not how the world works anymore. People questioned Palantir and their motives a few years ago, but frankly they’re a bunch of tardy children compared to this.

The gap in cost and speed between building on this and developing and training your own large language model (which invariably won’t be multimodal) will be massive, and OpenAI knows this.

It pretty much killed most generative AI startups in one fell swoop too. And there’s every chance they’ll buy out Zapier and own the entire ecosystem of integrations end to end.

You’ll want to go to the dominant player because it’s proven, and the speed of iterative releases and improvements is already apparent, so you’ll want to take full advantage.

We are living in interesting times but also dangerous ones. The people we need are not part of the conversation. The people who are involved aren’t informed and spend all their time in committees, whitepapers, and think-tank discussions, all while the technology races ahead without any meaningful regulation or protection.

Democratizing AI is already dead. It’s not in the hands of everyone, it’s in the control of just one.

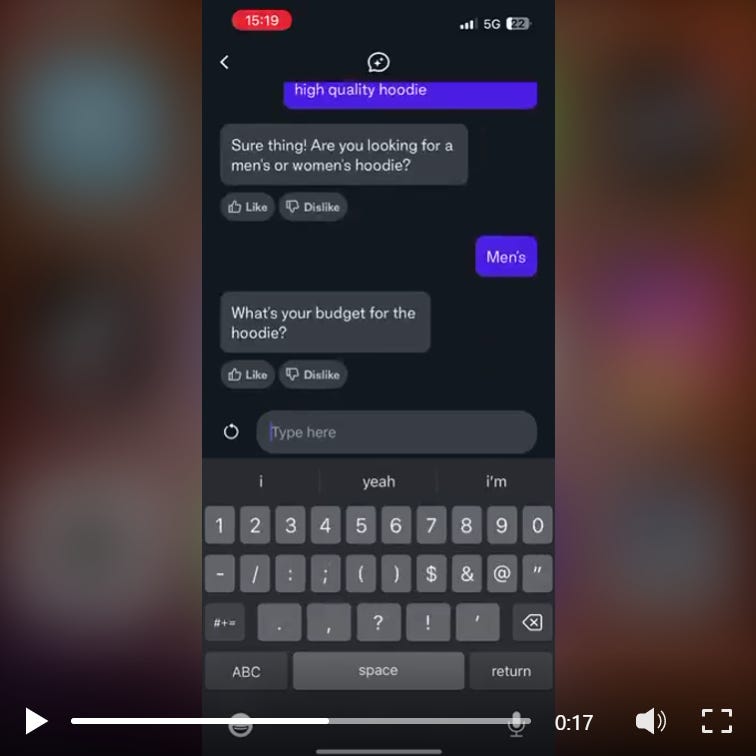

No, you don’t need to integrate ChatGPT into your customer experience

Before you get excited and implement ChatGPT into your retail customer experience, consider just how clunky having to chat your way through a website is going to be.

This is not a good customer experience: it takes 10x longer than browsing an app or website with simple filters. Please think before you fall for the hype and ruin your brand reputation because your boss told you to be “innovative”.

LLMs have a place in first- and second-line customer service processes, but this definitely isn’t it. I’m more interested in how artificial intelligence like this will handle customer queries and resolve issues in natural language, via speech, text, or both. Think multimodal input, where an image of a broken or damaged item can be assessed instantly along with the request. These are far more impactful than asking a bot to find a piece of clothing and then having to continually answer more questions.

Where customer service may end up with use cases like this is fewer call centers, potentially decimating that industry and the local economies of towns that were built around those jobs.

Start planning for disruption in customer service but for god’s sake don’t forget the experience.